Trust in Numbers: An Ethical (and Practical) Standard for Identity-Driven Algorithms

Who was the real Tarra Simmons? On November 16, 2017, she sat before the Washington State Supreme Court. The child of addicts, and an ex-addict and ex-felon herself, she had subsequently graduated near the top of her law school class. The Washington State Law Board had denied her access to the bar, fearing that the “old Tarra” would return. She was asking the court to trust her to become an attorney, and the outcome of her case rested on whether her past could be used to predict her future.

Algorithms that use the past to predict the future are commonplace: they predict what we’ll watch next, or how financially stable we will be, or, as in Tarra’s case, how likely we are to commit a crime. The assumption is: “with enough data, anything is predictable.” Over the last several years, headlines have repeatedly illustrated the influence of algorithms on human well-being, along with the inherent biases that affect many of them. Before we rush to embrace artificial intelligence algorithms, how can we ensure that they promote justice and fairness rather than reinforcing existing inequalities?

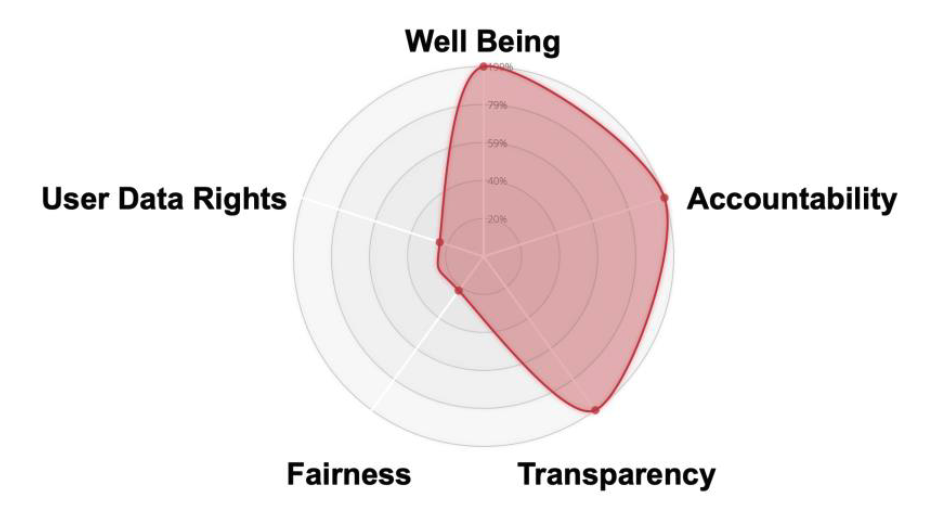

Recent work by IBM and the IEEE has helped to solidify an ethical approach to artificial intelligence. The following five tenets bear special attention as we seek to use technology wisely with an identity-centric mindset.

- Well-Being

The first tenet of a practical ethical approach is that Artificial Intelligence must put human well-being first. That sounds well and good, but how is well-being defined? Certainly, there is a core of universal truth that grounds well-being, but much of it is culturally and organizationally dependent. Difficult choices must be made – to protect one class, another may be negatively impacted.

Using a tool known as an ethics canvas, these choices and outcomes may be explored and centrally documented, allowing everyone in the organization to understand the moral implications of their actions in a clear way.

- Accountability

Once the ethical choices are laid out using the ethics canvas above, organizations must ensure that they are accountable for meeting the documented ethical standard. This is done in two primary ways. First, all ethical decisions must be documented as solutions are designed and architected. This encourages designers, architects, and implementers to make ethical choices and provides a documented record of why each choice was made. Second, a feedback loop must be built into the solution so that end users (those directly impacted by the technology) have a method for holding us accountable as well.

- Transparency

For users to hold us accountable, however, the reasons for our decisions must be transparent to those end users. True transparency not only answers the question of why, but also breaks down the how in a simple way. When applying this to artificial intelligence, it is critical to use language that the end user can easily understand, because technical jargon quickly becomes opaque. With some forms of artificial intelligence, this simplicity is more easily achieved than with others. Take machine learning, for example: how can one know what factors an outcome was based on when the artificial intelligence learns for itself? One way is to use open source tools such as LIME, which can help identify the factors that most influenced a particular prediction. This transparency is more than disclosure for disclosure’s sake. By clearly communicating its reasoning process, it builds trust in AI itself.

- Fairness

For AI to promote fairness, it must expose its own biases – a tricky prospect in the best of circumstances. One example is hiring or salary determination algorithms trained on historical data, which tend to depress the value of women in the marketplace. These data sets reflect past cultural trends in which fewer women advanced as far in their careers as their male colleagues, due to familial roles or even past cultural stereotypes. This skewed data becomes a self-fulfilling prophecy that locks women into the patterns of the past. When historically disenfranchised groups lack a voice in the formation of AI systems, biases in the data remain uncorrected, and the result is likely to perpetuate the institutionalized bias that must be eradicated. Representation of women and minorities in the artificial intelligence and data science communities is weak (only 20% of AI faculty at universities worldwide are women). For bias to be revealed, we need their unique voices conducting research, speaking at conferences, and leading the next wave of AI projects.

- User Data Rights

The other tenets of ethics find their true expression in user data rights. Rather than a nice-to-have, control over the data that makes up your identity is a fundamental human right. Technology that supports user data rights (UMA and consent management, data anonymization and pseudonymization) should be second nature for identity professionals. These techniques and tactics provide the firm grounding on which the ethical standards can be fulfilled.
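
As a rough illustration of the pseudonymization mentioned above, the following minimal sketch replaces a direct identifier with a keyed hash so that records can still be linked without exposing the original value. Everything here is an assumption for illustration rather than a prescribed method: the function names `pseudonymize` and `pseudonymize_record`, the choice of HMAC-SHA256, the sample record, and the hard-coded key (a real system would load the key from a secrets store).

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a stable keyed pseudonym (hex HMAC-SHA256) for an identifier."""
    # Normalize so that trivially different spellings map to the same pseudonym.
    normalized = identifier.strip().lower()
    return hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, direct_identifiers: set, secret_key: bytes) -> dict:
    """Copy a record, replacing the listed direct identifiers with pseudonyms."""
    return {
        field: pseudonymize(str(value), secret_key) if field in direct_identifiers else value
        for field, value in record.items()
    }


# Hypothetical data and key, for illustration only.
key = b"example-key-do-not-use-in-production"
user = {"email": "Jane.Doe@example.com", "department": "legal", "tenure_years": 3}
safe = pseudonymize_record(user, {"email"}, key)
```

Because the hash is keyed, the same person maps to the same pseudonym across data sets, yet someone without the key cannot confirm a guess by hashing candidate email addresses. Note that this is pseudonymization, not anonymization: whoever holds the key can still re-link the data.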

Self Evaluation and Measurement

For an ethics standard to be practical, it must have some form of measurement associated with it. While no organization or person will ever be 100% ethical, it is important to conduct regular self-evaluations to measure progress along each axis of the ethical standard.

The goal is to always be improving – to be more aware of the impact of AI on human well-being, to be working for accountability internally and externally, to be increasingly transparent with our decision making, to be seeking out other voices that might go unheard, and to be using standards to improve how we protect user data rights.
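
To make the idea of measurement concrete, here is one possible sketch for the fairness axis: comparing selection rates across groups in an algorithm's decisions. The function names, the group labels, and the sample decisions are all hypothetical, and the ratio shown is only one of many fairness measures, not one this standard prescribes.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Given (group, was_selected) pairs, return {group: fraction selected}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical hiring outcomes: 3 of 10 women and 6 of 10 men selected.
decisions = (
    [("women", True)] * 3 + [("women", False)] * 7
    + [("men", True)] * 6 + [("men", False)] * 4
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(decisions)
```

Tracking a number like this at each self-evaluation gives a trend rather than a verdict. A commonly cited rule of thumb treats ratios below 0.8 as worth investigating, but that threshold is an assumption brought in from outside this article, and a low ratio is a prompt for human review, not an automatic finding of bias.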

In the end, the court decided, unanimously, in Tarra’s favor. The court wrote in its judgment: “We affirm this court’s long history of recognizing that one’s past does not dictate one’s future.” That is a fitting mantra as the race to embrace new predictive technology continues and the assumption that, with enough data, anything is predictable lingers.

Past patterns in aggregate can be helpful, but the individuals involved in the systems being built should not be lost. The seductive power and potential of technology must not be allowed to outpace ethics. We must strive to use AI while keeping our humanity intact.